The world in our heads is not a precise replica of reality; our expectations about the frequency of events are distorted by the prevalence and emotional intensity of the messages to which we are exposed.

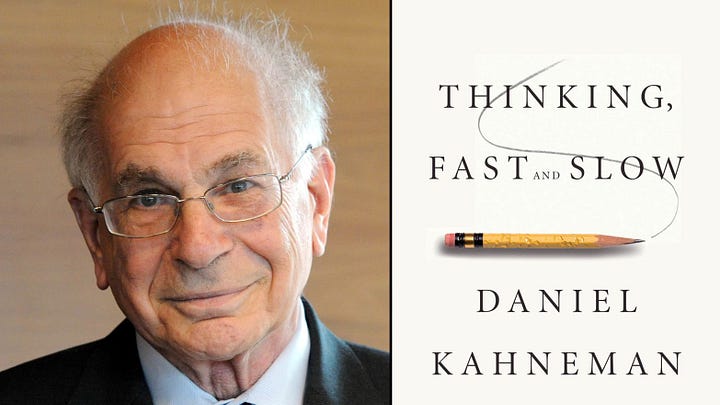

“Thinking, Fast and Slow” by Daniel Kahneman is a thought-provoking book that highlights the cognitive biases and thinking patterns that affect human decision-making. Here are some key takeaways from the book to improve your decision-making:

- Dual Process Theory of the Mind: Kahneman introduced the Dual Process Theory of the Mind, which distinguishes between two modes of thinking – System 1 and System 2.

- System 1 is the part of the mind that acts intuitively and automatically, often without conscious control. Its main advantage is the ability to make quick decisions and judgments.

- System 2 is what we usually have in mind when we think of the part of the mind responsible for deliberate decision-making, reasoning, and beliefs. It controls conscious activities such as self-control, choice, and intentional focus.

- Understanding the workings of these two systems can help us improve our decision-making.

To see how the two systems work, try solving the famous bat-and-ball problem:

A bat and a ball cost $1.10 in total. The bat costs $1 more than the ball. How much does the ball cost?

Take a few seconds and try to solve this problem.

The price that comes to mind, $0.10, is the product of the intuitive, automatic System 1 at work.

Do you see the error? If the ball cost $0.10, the bat would cost $1.10 and the total would be $1.20. The correct answer is $0.05.
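The arithmetic behind that answer can be checked with a few lines of Python. This is only an illustrative sketch: the function names `solve_bat_and_ball` and `is_valid` are made up for this post, and prices are kept in whole cents to avoid floating-point rounding.

```python
# Prices are in integer cents so the arithmetic stays exact.
TOTAL_CENTS = 110       # bat + ball = $1.10
DIFFERENCE_CENTS = 100  # bat - ball = $1.00


def solve_bat_and_ball(total, difference):
    """Return (bat, ball) such that bat + ball == total and bat - ball == difference."""
    # Substituting bat = ball + difference into bat + ball = total
    # gives 2 * ball + difference = total.
    ball = (total - difference) // 2
    bat = ball + difference
    return bat, ball


def is_valid(bat, ball, total, difference):
    """Check a candidate answer against both conditions of the puzzle."""
    return bat + ball == total and bat - ball == difference


bat, ball = solve_bat_and_ball(TOTAL_CENTS, DIFFERENCE_CENTS)
print(f"ball = ${ball / 100:.2f}, bat = ${bat / 100:.2f}")

# The intuitive answer: a 10-cent ball forces a $1.10 bat, which breaks the total.
print(is_valid(110, 10, TOTAL_CENTS, DIFFERENCE_CENTS))
```

The second check is the step System 1 skips: plugging the quick answer back into both conditions of the problem.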

What just happened is that your impulsive System 1 took over and responded automatically, relying on gut feeling. But it responded too fast.

Normally, when faced with an unclear situation, System 1 would call on System 2 to solve the problem, but in the bat-and-ball problem, System 1 was fooled. It saw the problem as simpler than it is and mistakenly believed it could handle it alone.

The bat-and-ball problem exposes our instinct for mental laziness. When the brain is active, we usually use only the minimum amount of energy that the task requires. This is also known as the law of least effort. Because checking answers with System 2 uses more energy, the mind won’t do so when it thinks System 1 alone is enough. Even though System 2 is useful, engaging it takes effort and energy, so the mind tends to take the shortcuts that System 1 offers.

- Major fallacies because of two modes of thinking

- Priming: Our minds are wonderful associative machines, allowing us to easily associate words like “lime” with “green”. Because of this, we are susceptible to priming, in which a common association is invoked to move us in a particular direction or action. This is the basis for “nudges” and advertising using positive imagery.

- Cognitive Ease: Whatever is easier for System 2 is more likely to be believed. Ease arises from idea repetition, clear display, a primed idea, and even one’s own good mood. It turns out that even repeating a falsehood can lead people to accept it, despite knowing it is untrue, because the idea becomes familiar and cognitively easy to process.

- Jumping to Conclusions: Our System 1 is “a machine for jumping to conclusions” that bases its conclusions on “What You See Is All There Is” (WYSIATI). WYSIATI is the tendency for System 1 to draw conclusions from readily available, sometimes misleading information and then, once they are made, to believe in those conclusions fervently. The halo effect, confirmation bias, framing effects, and base-rate neglect are all examples of jumping to conclusions in practice.

- The Planning Fallacy: The planning fallacy is the tendency to underestimate the time, effort, and resources required to complete a task or achieve a goal. This can lead to unrealistic expectations and poor planning.

- Emotions and Decision-making: Kahneman explores how emotions can influence decision-making, sometimes in ways that we may not even be aware of. For example, the “affect heuristic” refers to the tendency to rely on our emotions when making judgments about risk or probability.

- Biases/Effects impacting our Decision Making

- The Primacy Effect: Kahneman describes how the first impression we form about a person, event, or product can significantly influence our subsequent judgments. This is called the primacy effect.

- The Halo Effect: The halo effect refers to how our positive impression of one aspect of a person or thing can color our perception of other, unrelated attributes.

- Confirmation Bias: Confirmation bias is the tendency to favor information that confirms our preexisting beliefs or hypotheses while disregarding or downplaying contradictory evidence. This bias can lead to overconfidence and faulty decision-making. For example, a person who believes in a certain conspiracy theory may seek out only information that supports that theory and ignore anything that contradicts it.

- Anchoring Bias: Anchoring bias is the tendency to rely too heavily on the first piece of information (the anchor) when making subsequent judgments or decisions. This can lead to overestimation or underestimation of values, depending on the initial anchor. For example, a person may be more likely to offer a higher price for a product if the initial price suggested to them is high, even if the product’s actual value is much lower.

- The Illusion of Understanding and the Illusion of Control: The illusion of understanding refers to the tendency to believe that we understand complex systems or phenomena better than we actually do. This can lead to faulty decision-making and inaccurate predictions. The illusion of control, on the other hand, is the belief that we have more control over events and outcomes than we actually do. This can lead to overconfidence and poor risk assessment. For example, a gambler may believe that their skill in playing a game of chance gives them more control over the outcome, leading them to continue playing even when the odds are against them.

- Loss Aversion: Loss aversion refers to the tendency to place more weight on avoiding losses than on achieving gains of equal value. This can lead to risk aversion and missed opportunities.

- Framing Effects: Framing effects refer to how the same information presented in different ways can lead to different decisions or judgments. This highlights the importance of how information is presented and communicated.

- Prospect Theory: Prospect theory is a theory of decision-making that suggests people evaluate outcomes as gains and losses relative to a reference point, rather than by the absolute value of the final outcome. People also tend to give small probabilities more weight than they deserve. For example, a person may be more willing to take a risk if the potential gains are high, even if the probability of success is low.

- Role of Heuristics: We often find ourselves in situations where we have to make quick judgments. To do this, our minds have developed little shortcuts to help us instantly make sense of our surroundings. These are called heuristics.

For the most part, these processes are very useful, but the problem is that our minds often overuse them. Applying these rules in inappropriate situations can lead to mistakes. To better understand what heuristics are and the errors that follow, we can consider two types:

- The substitution heuristic occurs when we answer an easier question than the one actually asked. For example, try this question: “A woman is running for sheriff. How successful will she be in office?” We automatically replace the question we should have answered with an easier one, like, “Does she look like someone who would make a good sheriff?” This substitution means that instead of researching the candidate’s background and policies, we simply ask ourselves the much easier question of whether this woman fits our mental image of a good sheriff. Unfortunately, if she doesn’t fit that mental image, we’ll reject her, even if she has years of crime-fighting experience that make her a good candidate.

- The availability heuristic occurs when you think something is more likely to happen just because you hear about it more often or find it easier to remember. For example, strokes cause more deaths than traffic accidents, but one study found that 80% of respondents thought more people died from traffic accidents. That’s because we hear more about these deaths in the media, and because they leave a deeper impression: we remember deaths from a horrible accident more easily than deaths from a stroke, and so we are more likely to react inappropriately to these dangers.

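To make the loss aversion and prospect theory items above more concrete, here is a minimal Python sketch of a prospect-theory value function. It is an illustration under stated assumptions, not something taken from this summary: the parameter values (0.88 for curvature, 2.25 for loss aversion) are the estimates Tversky and Kahneman reported in their 1992 paper, and the function name `subjective_value` is made up for this example.

```python
# Assumed parameters: estimates reported by Tversky and Kahneman (1992),
# not figures from this summary.
ALPHA = 0.88   # curvature for gains (diminishing sensitivity)
BETA = 0.88    # curvature for losses
LAMBDA = 2.25  # loss-aversion coefficient: losses weigh about 2.25x gains


def subjective_value(outcome):
    """Felt value of a gain or loss measured from a reference point of 0."""
    if outcome >= 0:
        return outcome ** ALPHA
    return -LAMBDA * ((-outcome) ** BETA)


gain = subjective_value(100)
loss = subjective_value(-100)

print(f"felt value of winning 100: {gain:.1f}")
print(f"felt value of losing 100:  {loss:.1f}")
print(f"loss-to-gain ratio: {abs(loss) / gain:.2f}")

# A 50/50 bet to win or lose 100 has an expected money value of zero,
# yet under these assumptions it feels like a bad deal.
print(0.5 * gain + 0.5 * loss < 0)
```

Under these assumed parameters, losing a given amount hurts a little more than twice as much as winning the same amount pleases, which is why a fair coin flip for equal stakes feels unattractive.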

In summary, “Thinking, Fast and Slow” provides valuable insights into the biases and thinking patterns that affect our decision-making. By understanding these biases, we can make better decisions and avoid common pitfalls. I read the book myself, and it drastically changed how I think.
